AutomataGPT

Forecasting and ruleset inference for 2D cellular automata, from the AutomataGPT paper (Advanced Science, under revision).

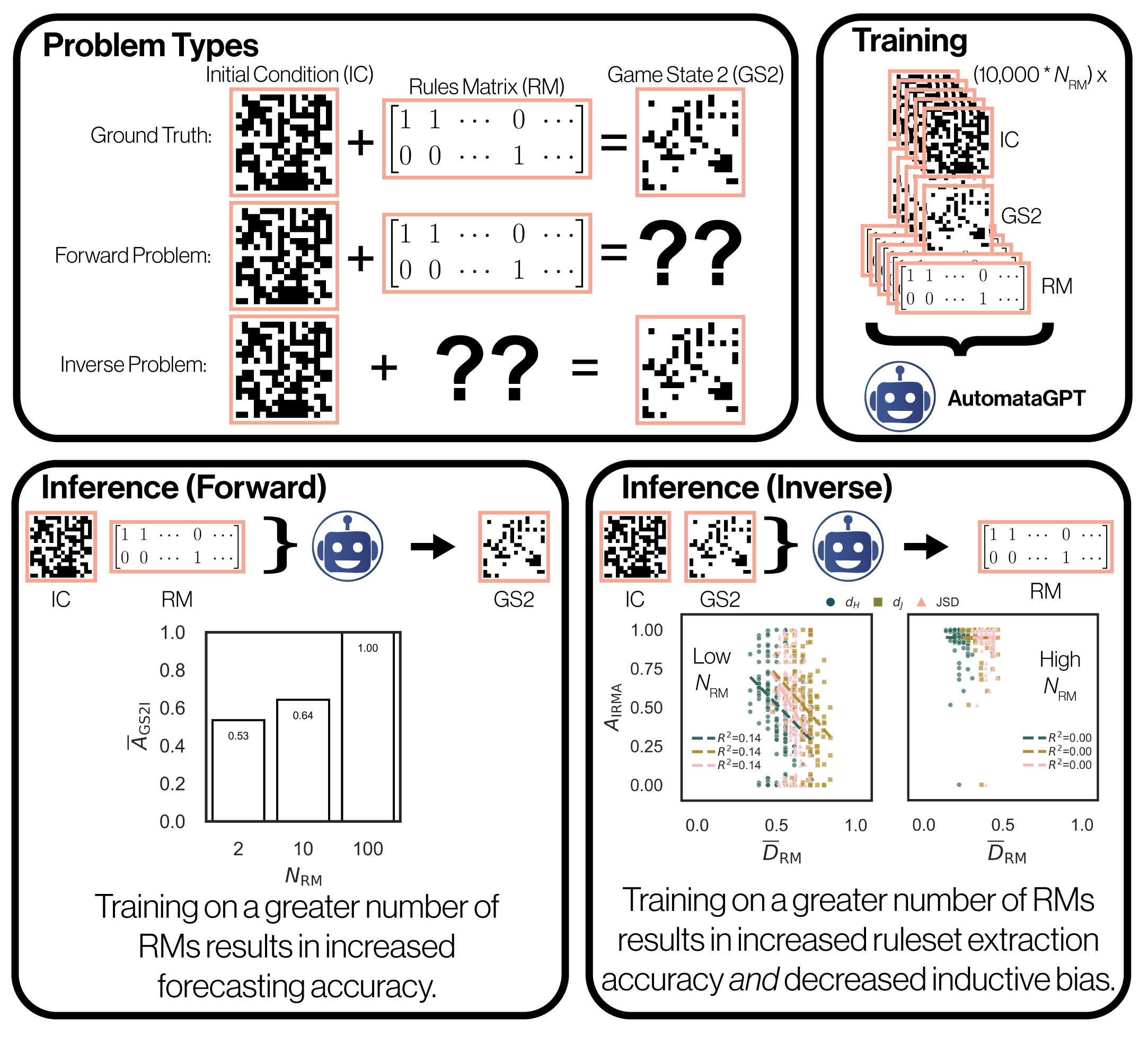

AutomataGPT pairs a causal transformer forecaster with a ruleset inference head to learn cellular automaton dynamics directly from rollouts. Trained across a diverse set of 2D rules and neighborhoods, the model stays stable over long forecast horizons and recovers hidden transition rules from short sequences.
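The sketch below shows what "rollouts" and "hidden transition rules" mean for one illustrative rule family. It assumes binary, Life-like (outer-totalistic) rules on a Moore neighborhood with wraparound edges, which is only one of the many rule and neighborhood types the model is trained on. All names here (`make_rule`, `step`, `rollout`, `infer_rule`) are hypothetical and are not part of this repository's API. `infer_rule` is a naive lookup-table baseline that reads transitions directly off consecutive frames. It is not AutomataGPT's learned inference head, but it shows why a short sequence can leave parts of a rule unobserved.

```python
import random
from itertools import product

# Hypothetical encoding (an assumption, not AutomataGPT's format): a Life-like rule
# maps (current state, number of live Moore neighbors) to the next state.
OFFSETS = [d for d in product((-1, 0, 1), repeat=2) if d != (0, 0)]


def make_rule(birth, survive):
    """Lookup table for a Life-like rule, e.g. B3/S23 -> birth={3}, survive={2, 3}."""
    return {(s, n): int(n in (survive if s else birth)) for s in (0, 1) for n in range(9)}


def live_neighbors(grid, i, j):
    """Count live cells in the Moore neighborhood, wrapping at the edges (torus)."""
    h, w = len(grid), len(grid[0])
    return sum(grid[(i + di) % h][(j + dj) % w] for di, dj in OFFSETS)


def step(grid, rule):
    """Apply the rule synchronously to every cell."""
    return [[rule[(cell, live_neighbors(grid, i, j))] for j, cell in enumerate(row)]
            for i, row in enumerate(grid)]


def rollout(grid, rule, steps):
    """Return the trajectory [grid_0, grid_1, ..., grid_steps]."""
    frames = [grid]
    for _ in range(steps):
        frames.append(step(frames[-1], rule))
    return frames


def infer_rule(frames):
    """Naive baseline: record each (state, neighbor count) -> next state seen between
    consecutive frames. Table entries never observed in the sequence stay None."""
    table = {(s, n): None for s in (0, 1) for n in range(9)}
    for prev, nxt in zip(frames, frames[1:]):
        for i, row in enumerate(prev):
            for j, cell in enumerate(row):
                table[(cell, live_neighbors(prev, i, j))] = nxt[i][j]
    return table


if __name__ == "__main__":
    life = make_rule(birth={3}, survive={2, 3})  # Conway's Game of Life, B3/S23
    rng = random.Random(0)
    grid = [[rng.randint(0, 1) for _ in range(16)] for _ in range(16)]
    recovered = infer_rule(rollout(grid, life, steps=4))
    known = {k: v for k, v in recovered.items() if v is not None}
    print(f"recovered {len(known)}/18 entries; all correct: "
          f"{all(life[k] == v for k, v in known.items())}")
```

Because the dynamics are deterministic, every entry the baseline does recover is correct. Entries for neighbor counts that never occur in the short rollout remain `None`, and a model has to generalize across rules to fill those gaps.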